Or, where to go to get checkmated

I quite like chess, but I’m also rather bad at it: you can prove this for yourself if you want by challenging me to a correspondence (“daily”) game on chess.com.

Still, just because I’m not great at chess doesn’t mean I can’t contribute to a good game. I’m not sure where the concept of “vote chess”, where a team of humans vote on the next move, originally came from; certainly it’s been a thing on chess.com for a long time and I assume it also exists elsewhere. Recently though I implemented my own version on Mastodon.

For the uninitiated, Mastodon is a bit like Twitter, but with much better moderation, no ads, and vastly fewer terrible people. You can find me on there these days at @PetraOleum@cloudisland.nz. I heartily recommend that all Kiwi readers (who aren’t terrible people) consider joining the Patreon-funded Cloud Island server, though of course accounts on one server can communicate with any of the others. Mastodon is also only one program on the wider interconnected Fediverse; another is Pixelfed, a decentralised and ad-free Instagram alternative.

My vote chess bot is, appropriately enough, located at @VoteChess@botsin.space. Every hour it plays the move chosen by its followers in a poll, and replies with a move generated by the chess engine Stockfish. Stockfish is also used to generate the poll options and to break ties in the voting; while the bot’s follower base is still small, both are currently set up to give the human players a slight advantage. The bot is written in Python using the Mastodon.py and python-chess libraries. The source code can be found on GitHub, although at time of writing the codebase is in need of a bit of tidying.
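As a rough illustration of the tie-breaking rule, here is a minimal Python sketch. It is not the bot’s actual code: the `choose_move` name, the vote dictionary format and the fallback for a poll with no votes are all assumptions, and `engine_ranking` simply stands in for whatever ordering Stockfish would supply.

```python
def choose_move(votes, engine_ranking):
    """Pick the next move from poll results, breaking ties with an engine ranking.

    votes:          dict mapping a SAN move to its vote count (assumed format)
    engine_ranking: candidate moves ordered best-first by the engine
    """
    if not votes or max(votes.values()) == 0:
        # Assumed fallback: nobody voted, so play the engine's top choice.
        return engine_ranking[0]
    top = max(votes.values())
    tied = [move for move, count in votes.items() if count == top]
    # A lower index in engine_ranking means the engine prefers that move;
    # moves the engine did not rank at all sort last.
    rank = {move: i for i, move in enumerate(engine_ranking)}
    return min(tied, key=lambda move: rank.get(move, len(engine_ranking)))


print(choose_move({"e4": 3, "d4": 3, "c4": 1}, ["d4", "e4", "c4"]))  # d4
```

Here e4 and d4 tie on three votes each, and the engine’s preference for d4 decides it.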

Over the last few months we’ve played more than two dozen games at one move per hour, collected in an automatically updated PGN file downloadable from my website. PGN (Portable Game Notation) files are human- and machine-readable text files that record the moves and metadata for one or more chess games. Chess analysis is of course a big field, but my thought was: can I convert the PGN file into a dataset I can analyse statistically?
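To make the format concrete, here is a small made-up PGN record and a minimal standard-library sketch that pulls out its tag pairs. The `read_tags` helper is hypothetical and deliberately naive (one game, no escaped quotes); for real files, python-chess’s `chess.pgn.read_game` or `chess.pgn.read_headers` is the better tool.

```python
import re

# A made-up single-game PGN: tag pairs in square brackets, then the movetext.
SAMPLE_PGN = """[Event "Example game"]
[White "Alice"]
[Black "Bob"]
[Result "1-0"]

1. e4 e5 2. Qh5 Nc6 3. Bc4 Nf6 4. Qxf7# 1-0
"""

# Each tag pair sits on its own line, in the form [Name "value"].
TAG_PAIR = re.compile(r'^\[(\w+) "(.*)"\]', re.MULTILINE)


def read_tags(pgn_text):
    """Return the tag pairs of a single PGN game as a dict (naive: no escapes)."""
    return dict(TAG_PAIR.findall(pgn_text))


print(read_tags(SAMPLE_PGN)["Result"])  # 1-0
```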

So far as I can tell R has no native chess support, so it would make sense to use Python for this. However, what R does have is a library called reticulate, which provides a surprisingly simple interface to Python, and I’ve been wanting to play with it for a while.

Working with reticulate turns out to actually be fairly easy. Imported objects and functions are called from their libraries with the R `$` operator, and many of the more basic types are seamlessly converted at the boundary between the two languages. For example, this is how I’m loading PGN files into a list of game objects:

```r
library(reticulate)

# Make sure we're using the right Python!
use_python("/usr/bin/python3")

# Library imports
pylib <- import_builtins()
chess <- import("chess")
pgn <- import("chess.pgn")

# Parse a PGN file into a list of python-chess Game objects
readPGN <- function(pgnFile) {
  # PGN files are plain text; reading them as UTF-8 avoids locale surprises
  pgn.file <- pylib$open(pgnFile, encoding = "utf-8")
  on.exit(pgn.file$close())
  games <- list()
  repeat {
    # read_game() returns None (NULL in R) once the file is exhausted
    lgame <- pgn$read_game(pgn.file)
    if (is.null(lgame)) {
      break
    }
    games[[length(games) + 1]] <- lgame
  }
  games
}
```

Reticulate also allows you to access the output of Python scripts, and to include Python code chunks in rmarkdown documents; for a little of the latter, see later in this post.

I wrote some helper functions to construct columns for a data frame out of the game list. This isn’t the most computationally efficient approach, since many columns repeat the same computations, but it is a lot tidier than building the data frame row by row. The functions return everything from the date of the game to the number of pawns remaining on the board at its end, although there are still more things I could add.
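The idea of building a data frame one column at a time translates directly to Python. Here is a hypothetical sketch of two such columns, assuming each game has already been reduced to its PGN `Result` tag and the FEN string of its final position (python-chess can supply the latter via `game.end().board().fen()`). The helper names are invented for illustration. Each helper maps over every game, which keeps things tidy, and is also why several columns end up repeating the same work.

```python
# Map PGN Result tags to a winner label; unfinished games ("*") become None.
RESULTS = {"1-0": "white", "0-1": "black", "1/2-1/2": "draw"}


def winner_column(result_tags):
    """One entry per game: 'white', 'black', 'draw' or None."""
    return [RESULTS.get(tag) for tag in result_tags]


def pawns_column(final_fens):
    """Total pawns of both colours left on the board at the end of each game.

    The first space-separated field of a FEN holds the piece placement,
    where 'P' is a white pawn and 'p' a black one.
    """
    return [fen.split()[0].lower().count("p") for fen in final_fens]


START = "rnbqkbnr/pppppppp/8/8/8/8/PPPPPPPP/RNBQKBNR w KQkq - 0 1"
BARE_KINGS = "8/8/8/8/8/8/8/K6k w - - 0 1"
print(winner_column(["1-0", "1/2-1/2", "*"]))  # ['white', 'draw', None]
print(pawns_column([START, BARE_KINGS]))       # [16, 0]
```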

At time of writing we’ve played 28 vote chess games in a little over two months, a good start on a larger database. From the perspective of the human players our record looks like so:

| Human Colour | Total Games | Wins | Draws | Losses | Checkmates | Median turns |
|---|---|---|---|---|---|---|
| White | 12 | 8 | 3 | 1 | 9 | 52 |
| Black | 16 | 10 | 0 | 6 | 16 | 60 |
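A summary like this boils down to counting results and taking a median. As a minimal sketch, here is a hypothetical `record` helper run on a few invented games rather than the real vote chess data:

```python
from statistics import median


def record(games):
    """Summarise a list of (result, turns) pairs from the humans' point of view.

    result is one of "win", "draw", "loss"; turns is the game length in moves.
    """
    results = [result for result, _ in games]
    return {
        "total": len(games),
        "wins": results.count("win"),
        "draws": results.count("draw"),
        "losses": results.count("loss"),
        "median_turns": median([turns for _, turns in games]),
    }


# Invented games, purely to show the shape of the output.
print(record([("win", 40), ("loss", 61), ("win", 52)]))
```

For those three games this reports two wins, no draws, one loss and a median of 52 turns.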

There’s currently no support in the bot’s code for either side resigning in a losing position, so games tend to go all the way to the checkmate. Support for draws also needs more work, although if there are few enough pieces on the board the bot does currently query a Syzygy tablebase for adjudication.
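The “few enough pieces” check can be sketched without any chess library, by counting the letters in the piece-placement field of a FEN string. The `tablebase_eligible` helper and its default threshold of five pieces are assumptions, not the bot’s actual settings; the lookup itself would then go through python-chess’s `chess.syzygy.open_tablebase(...)` and `probe_wdl`, which need the tablebase files on disk.

```python
def piece_count(fen):
    """Count every piece, kings included, in the placement field of a FEN."""
    return sum(ch.isalpha() for ch in fen.split()[0])


def tablebase_eligible(fen, max_pieces=5):
    """True if the position has few enough pieces for a tablebase lookup.

    max_pieces is an assumption: it should match the Syzygy files actually
    installed (published sets go up to seven pieces).
    """
    return piece_count(fen) <= max_pieces


print(tablebase_eligible("rnbqkbnr/pppppppp/8/8/8/8/PPPPPPPP/RNBQKBNR w KQkq - 0 1"))  # False
print(tablebase_eligible("7k/8/8/8/8/8/8/KQ6 w - - 0 1"))  # True
```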

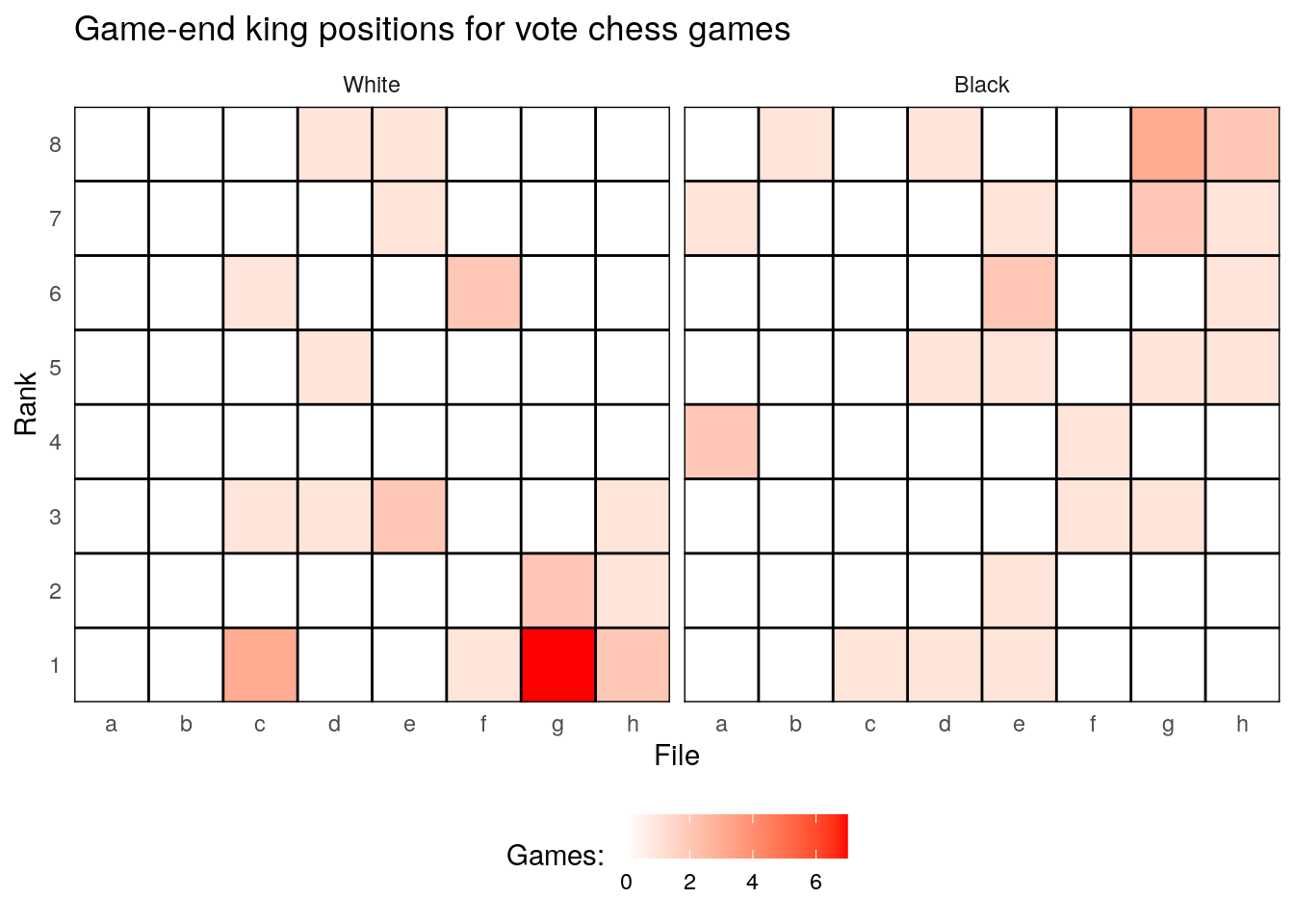

One question I’ve been interested in for a while is what squares pieces are most likely to end up on. The easiest piece to look at is the king, because there’s always exactly one of them on each side throughout the game. Here’s a heatmap of the game-end king positions for each player in all the vote chess games:

The most common locations are g1 (for white) and g8 (for black), which isn’t unexpected: these are the squares the kings are safely stowed on after castling kingside, and quite often that’s where they remain. Other common spots are nearby, suggesting minor defensive manoeuvres which may or may not have been successful. Still, there’s not really enough data to get a full picture here, and the vote chess games might not be typical. I wanted to look for a larger dataset.
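A heatmap like this only needs the king’s square in each final position. A minimal standard-library sketch, assuming the final positions are available as FEN strings; `king_squares` and `king_end_counts` are hypothetical helpers (in python-chess, `board.king(chess.WHITE)` does the same job for a `Board`).

```python
from collections import Counter

FILES = "abcdefgh"


def king_squares(fen):
    """Return (white_king_square, black_king_square), e.g. ('g1', 'g8')."""
    found = {}
    # The placement field lists ranks from 8 down to 1, separated by '/'.
    for i, rank_text in enumerate(fen.split()[0].split("/")):
        rank = 8 - i
        file_index = 0
        for ch in rank_text:
            if ch.isdigit():
                file_index += int(ch)  # a digit means that many empty squares
                continue
            if ch in "Kk":
                found[ch] = FILES[file_index] + str(rank)
            file_index += 1
    return found["K"], found["k"]


def king_end_counts(final_fens):
    """Tally end-of-game king squares separately for white and black."""
    white, black = Counter(), Counter()
    for fen in final_fens:
        w, b = king_squares(fen)
        white[w] += 1
        black[b] += 1
    return white, black


CASTLED = "r4rk1/8/8/8/8/8/8/R4RK1 w - - 0 1"
START = "rnbqkbnr/pppppppp/8/8/8/8/PPPPPPPP/RNBQKBNR w KQkq - 0 1"
white, black = king_end_counts([CASTLED, START, CASTLED])
print(white.most_common(1), black.most_common(1))  # [('g1', 2)] [('g8', 2)]
```

The two counters map directly onto the two panels of a per-colour heatmap.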

The Chess Olympiad is a tournament run every even-numbered year by FIDE, the international chess federation. The 44th Olympiad ought to be happening right now, in August, in Moscow, but the ongoing pandemic has of course put paid to that and it has been pushed back to 2021. As it happens, FIDE is currently running an online Olympiad, but I instead went back to the 43rd, held in 2018 in Batumi, Georgia, to find the dataset I was looking for.

As I understand it, the Olympiad pits national teams against each other on four boards (with each team holding a fifth player in reserve). There is both an open and a women’s division; taken together (and dropping a small handful of games corrupted by mistakes in transcription), this gives a dataset of 7287 games over 11 rounds!

| Division | Total Games | White wins | Draws | Black wins | Checkmates | Stalemates | Insufficient material | Max Elo | Median Elo | Median turns |
|---|---|---|---|---|---|---|---|---|---|---|
| Open | 4036 | 1628 | 1003 | 1405 | 154 | 7 | 22 | 2827 | 2255 | 43 |
| Women | 3251 | 1375 | 664 | 1212 | 431 | 11 | 12 | 2561 | 1894 | 42 |

Notably there are substantially more games in the women’s division that go all the way to the checkmate, rather than ending with a resignation once defeat becomes inevitable. I’ve never been to a chess tournament (for obvious reasons — again, I’m no good at it), but this difference in culture is really interesting.
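Raw checkmate counts slightly understate the gap, since the two divisions have different numbers of decisive games. Normalising by decisive games (white wins plus black wins), using only the figures in the table above and assuming every checkmate is also counted as a win for one side, gives a clearer comparison. The `mate_share` helper is just for illustration:

```python
def mate_share(white_wins, black_wins, checkmates):
    """Percentage of decisive games that ended in checkmate.

    Assumes every checkmate is also counted as a win for one side.
    """
    return 100 * checkmates / (white_wins + black_wins)


# Figures from the Olympiad table.
print(f"Open:  {mate_share(1628, 1405, 154):.1f}%")  # 5.1%
print(f"Women: {mate_share(1375, 1212, 431):.1f}%")  # 16.7%
```

That is roughly one decisive game in twenty ending in mate in the open division, against about one in six in the women’s division.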

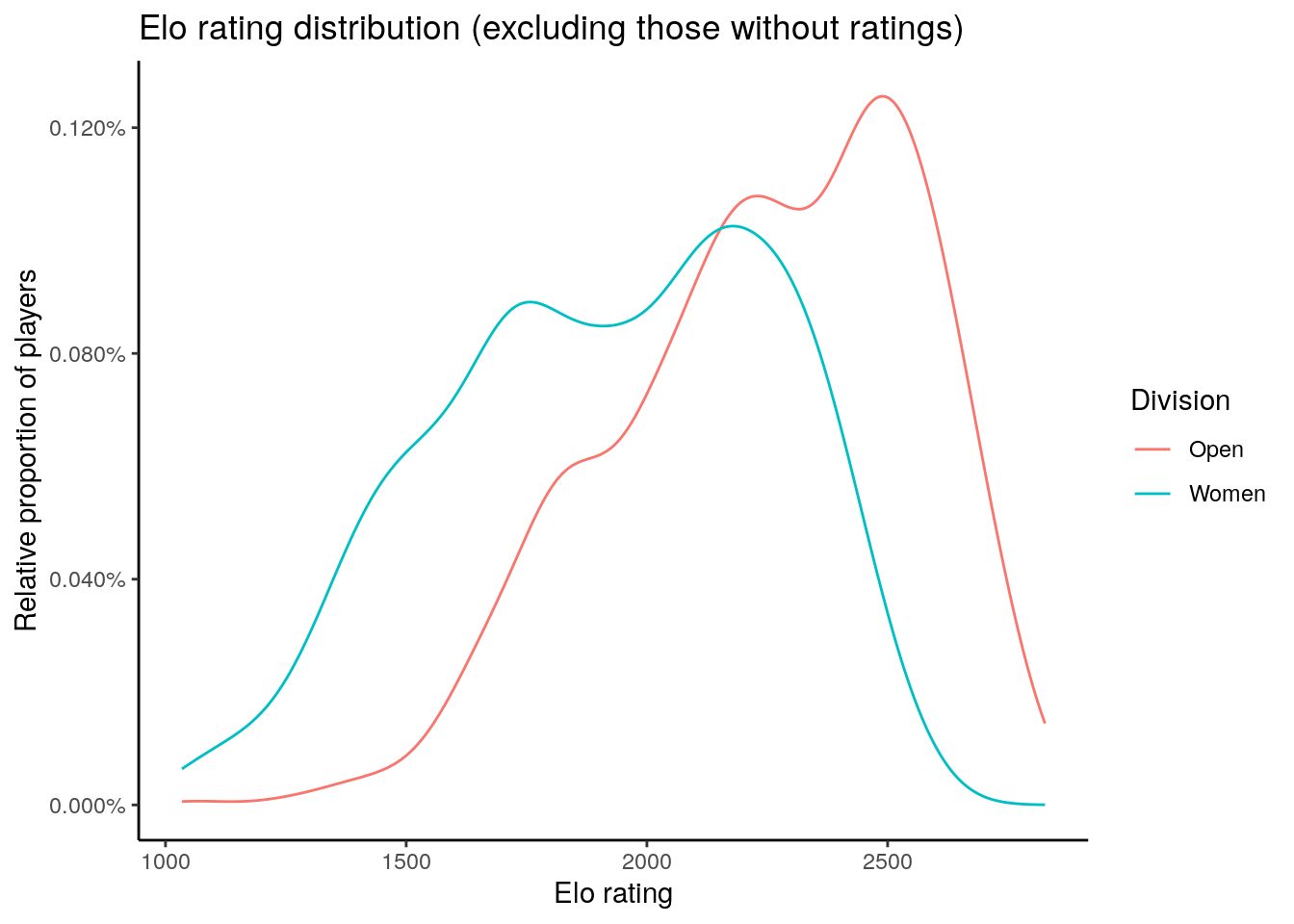

The difference in Elo rating (a numerical measure of relative ability, developed for chess but also widely used in esports and some traditional sports) ultimately comes down to there being fewer women than men who play chess. With a larger pool to draw from, the best five men in each country can be expected to be stronger on average than the best five women, even if ability were distributed identically in both groups. Countries are also more likely to be able to find five men who all hold official FIDE ratings than five women.

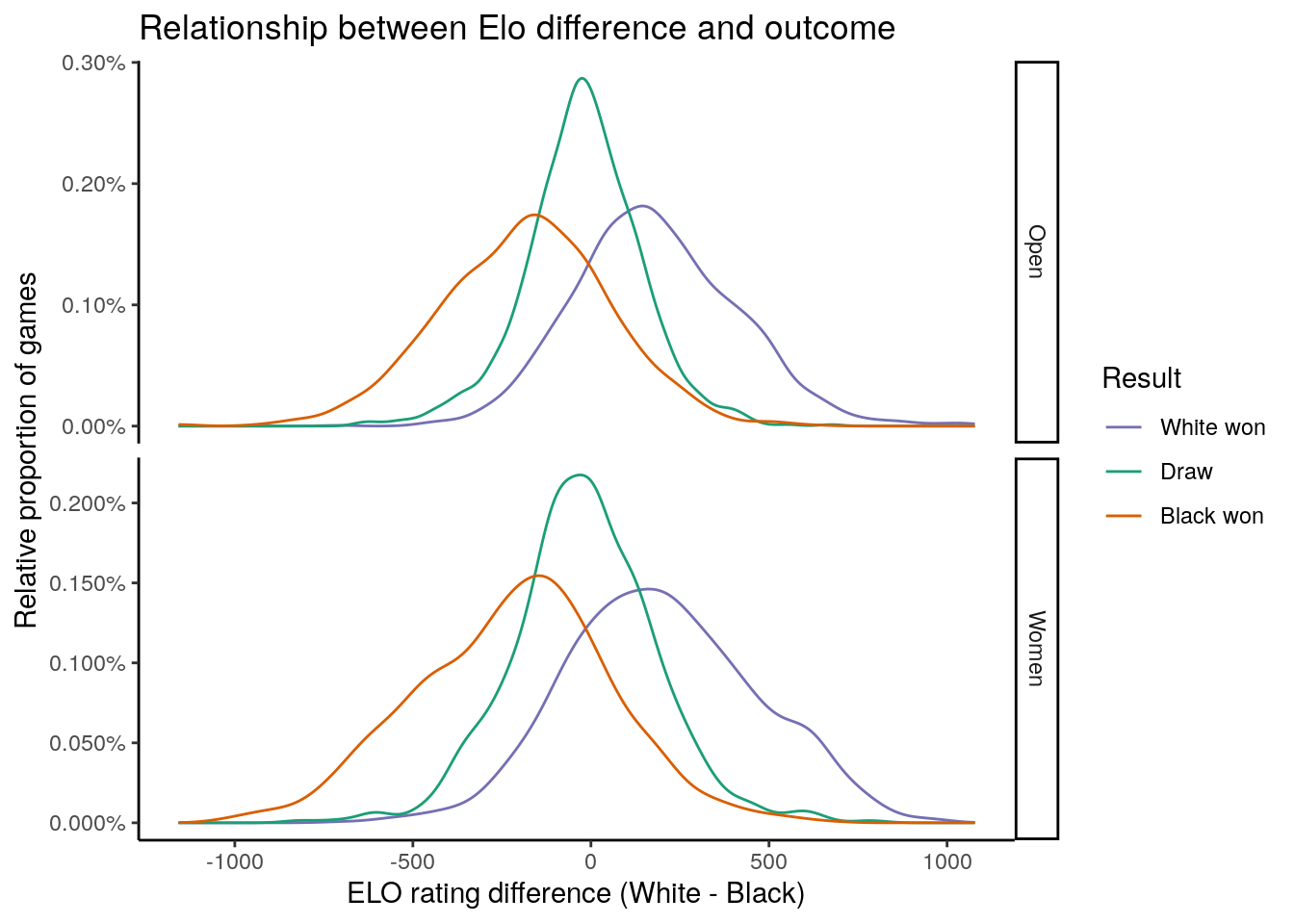

Rating difference correlates with the outcome of the game, but by no means guarantees it. As these games show it’s perfectly possible to draw against, or even beat, a player several hundred points better than you.
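For a sense of scale, the usual logistic form of the Elo model gives a player’s expected score as E = 1 / (1 + 10^((R_opponent − R_player) / 400)). FIDE’s official rating tables differ slightly, so treat this as an approximation. A minimal sketch:

```python
def expected_score(rating, opponent_rating):
    """Expected score per game (win = 1, draw = 0.5) under the logistic Elo model.

    E = 1 / (1 + 10 ** ((opponent_rating - rating) / 400))
    """
    return 1 / (1 + 10 ** ((opponent_rating - rating) / 400))


print(round(expected_score(2000, 2300), 2))  # 0.15
print(round(expected_score(2300, 2000), 2))  # 0.85
```

On this model a player 300 points below their opponent still scores about 0.15 points per game on average. Because a draw counts as half a point, expected score is not the same as a win probability, which fits with underdogs regularly drawing or even winning.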

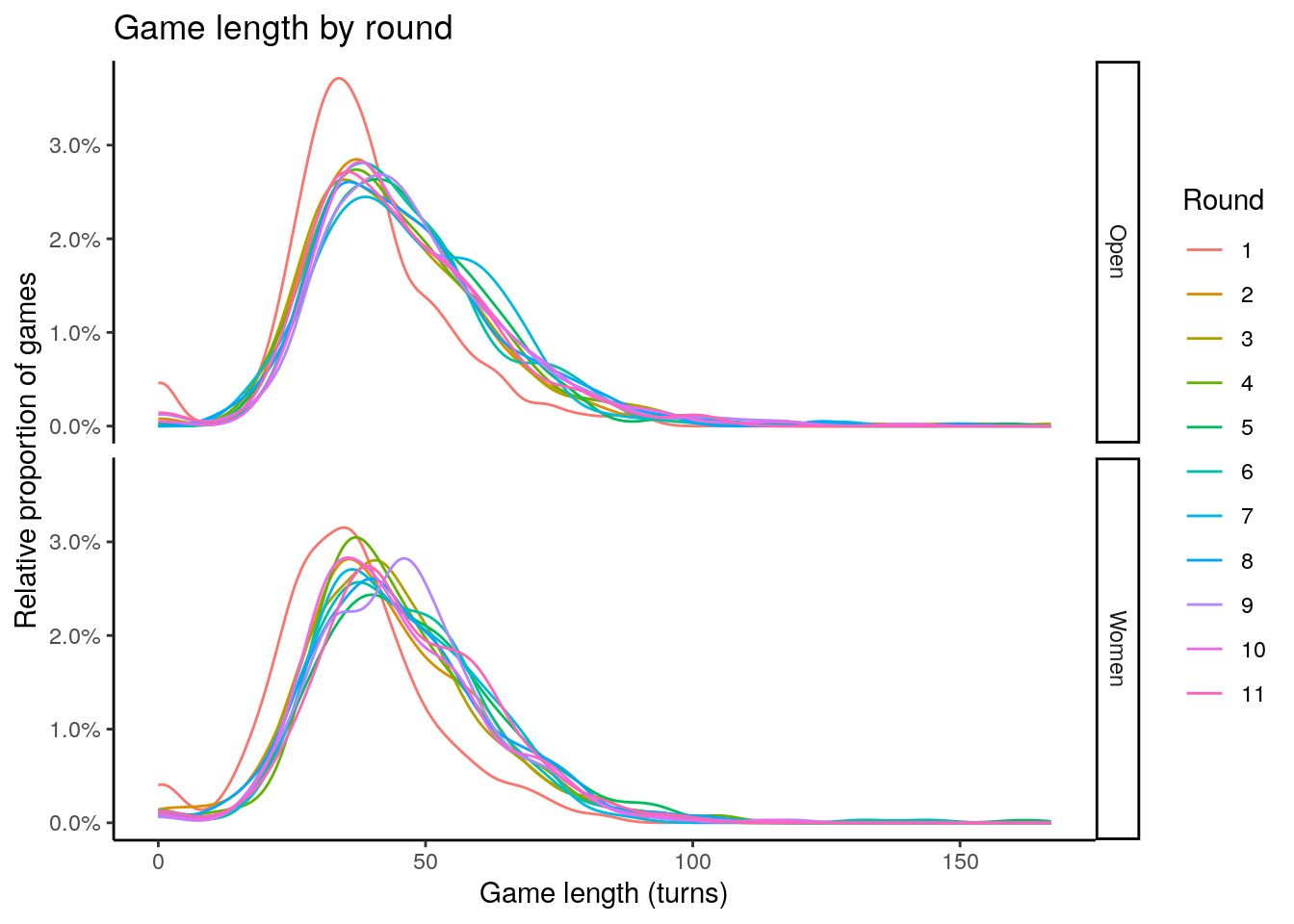

Another difference between the vote chess games and the Olympiad is that the former tend to run 10 to 20 turns longer, largely because only a minority of real-life games go all the way to checkmate or an official draw. As I mentioned earlier, games in the women’s division reach checkmate more often than those in the open division, but this doesn’t seem to shift the numbers much, if at all.

What is apparent though is that the first round is different to the rest, with more games ending before the second move (presumably because one player failed to show up at the board) but also shorter games in general.

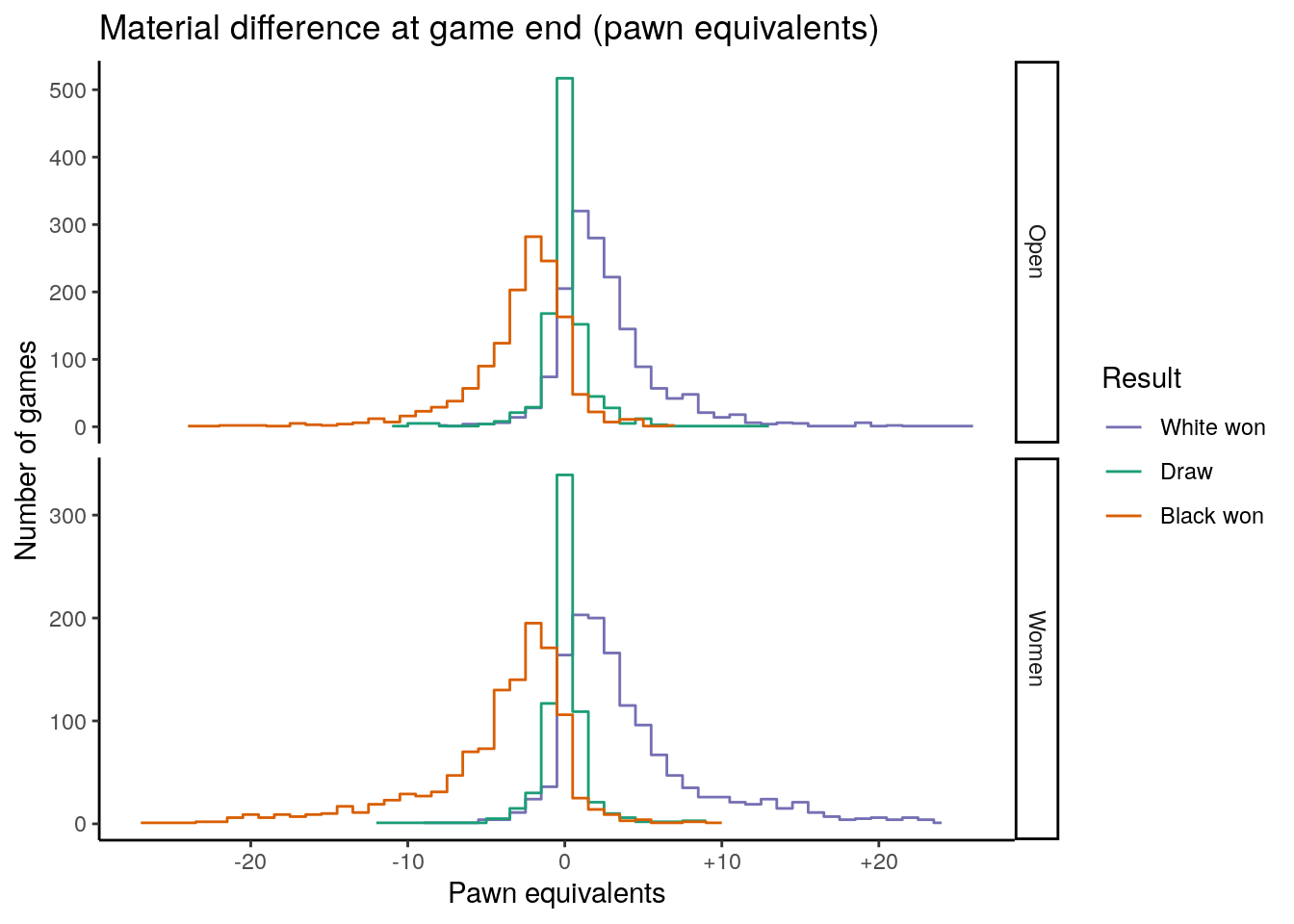

Much as Elo rating doesn’t perfectly predict the result of a game, the pieces each side has left on the board at game end don’t always line up with the result. The standard valuation gives a pawn one point, bishops and knights three, rooks five, and queens nine. This rough guide suggests that, for example, knights and bishops are broadly equal trades for each other, but that a queen (nine points) is not quite worth both of your rooks (ten).

But this is only a guide: as you can see, it’s perfectly possible (if uncommon) to end the game in a winning position despite not appearing to have the advantage in material. Ultimately the game is about checkmating the king, not taking pieces.
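Tallying material with the standard 1/3/3/5/9 values is straightforward. As a sketch (the `material` helper is hypothetical, and kings are left out because they are never captured), both sides’ totals can be read straight from a FEN string of the final position:

```python
# Conventional piece values; kings are left out since they are never captured.
PIECE_VALUES = {"p": 1, "n": 3, "b": 3, "r": 5, "q": 9}


def material(fen):
    """Return (white_material, black_material) for the position in a FEN string."""
    white = black = 0
    for ch in fen.split()[0]:
        if not ch.isalpha():
            continue  # digits and '/' are layout, not pieces
        value = PIECE_VALUES.get(ch.lower(), 0)  # kings score 0
        if ch.isupper():  # upper case = white piece
            white += value
        else:
            black += value
    return white, black


print(material("rnbqkbnr/pppppppp/8/8/8/8/PPPPPPPP/RNBQKBNR w KQkq - 0 1"))  # (39, 39)
print(material("rr1k4/8/8/8/8/8/8/3K3Q w - - 0 1"))  # (9, 10)
```

The starting position scores 39 for each side, and a lone queen against two rooks comes out 9 to 10.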

Another thing I can get from these PGNs is the opening of each game: the Olympiad PGN files include handy three-character Encyclopaedia of Chess Openings (ECO) codes. For example, in the very first game Wesley So, playing white, beat Roberto Carlos Sanchez Alvarez in the Najdorf Variation of the Sicilian Defence, which has the code B90. I did some fiddling with Python code chunks in rmarkdown, and it turns out you can generate a board for this fairly easily:

```python
import chess
import chess.svg

# 1. e4 c5 2. Nf3 d6 3. d4 cxd4 4. Nxd4 Nf6 5. Nc3 a6
moves = ["e4", "c5", "Nf3", "d6", "d4", "cxd4", "Nxd4", "Nf6", "Nc3", "a6"]

B90 = chess.Board()
for move in moves:
    # push_san() plays the move and returns it, so after the loop
    # B90_lm holds the last move (5...a6) for highlighting
    B90_lm = B90.push_san(move)

B90_svg = chess.svg.board(B90, lastmove=B90_lm)
print("<div class='chessboard' title='ECO B90'>{}</div>".format(B90_svg))
```

(This just requires setting `results="asis"` in the rmarkdown code chunk options, so that the raw HTML is printed as-is.)

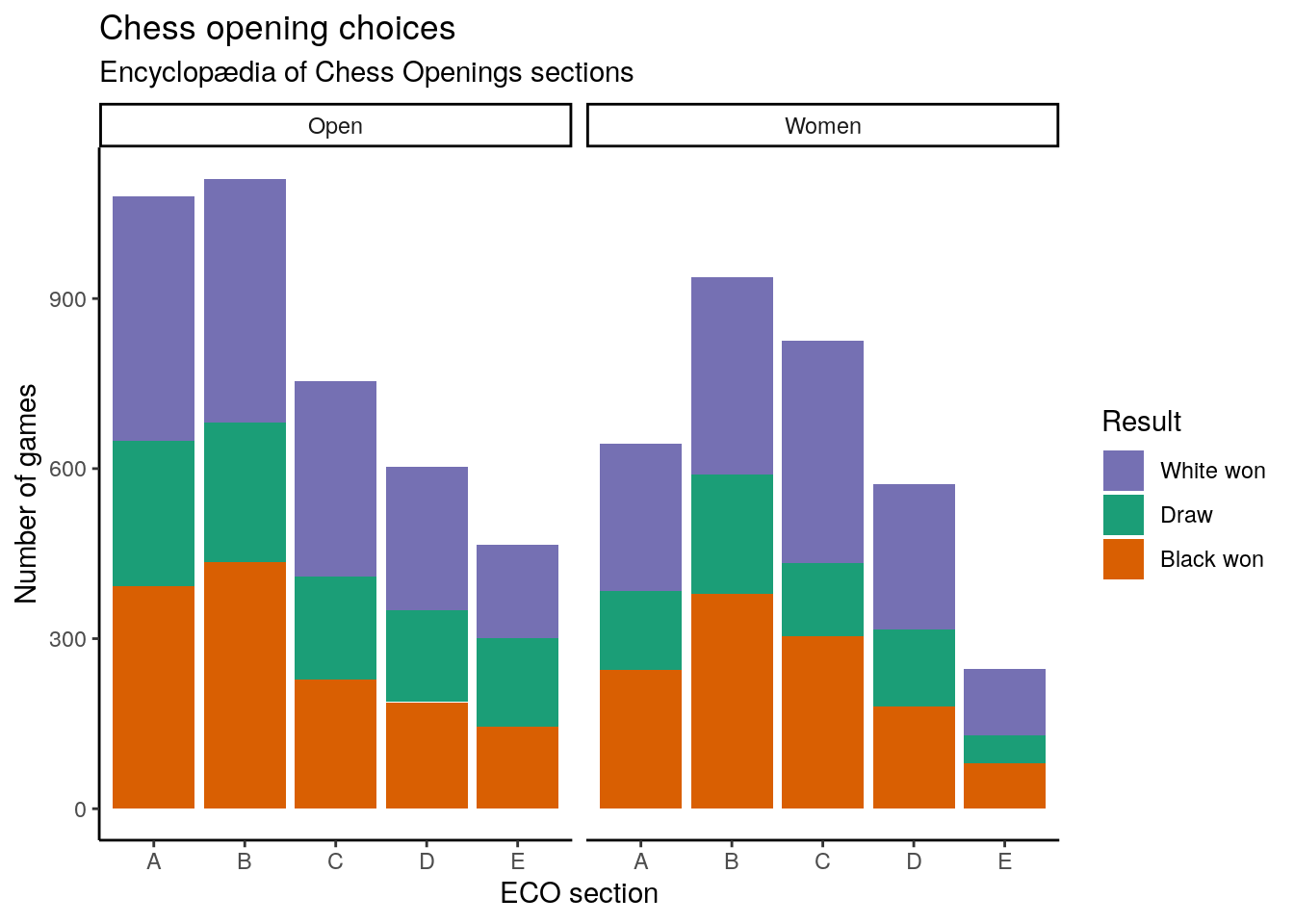

There are too many individual ECO codes to show in one graph, but the five initial letters (A to E) are much easier to visualise.
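Grouping by the first letter is a one-liner once the `ECO` tags have been extracted. A minimal sketch with a hypothetical `eco_sections` helper; the labels follow the usual ECO volume titles. Note that section C covers the French Defence as well as the open games proper.

```python
from collections import Counter

# First letter of an ECO code -> the broad volume it belongs to.
ECO_SECTIONS = {
    "A": "Flank openings",
    "B": "Semi-open games (other than the French Defence)",
    "C": "Open games and the French Defence",
    "D": "Closed and semi-closed games",
    "E": "Indian defences",
}


def eco_sections(eco_codes):
    """Count games per ECO section, skipping missing or malformed codes."""
    return Counter(code[0] for code in eco_codes if code and code[0] in ECO_SECTIONS)


counts = eco_sections(["B90", "C42", "B12", "", "E60"])
for letter in sorted(counts):
    print(letter, ECO_SECTIONS[letter], counts[letter])
```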

There are more differences here than I might have expected. Why are “open games” (section C) less common in the open division? A question for another day, possibly.

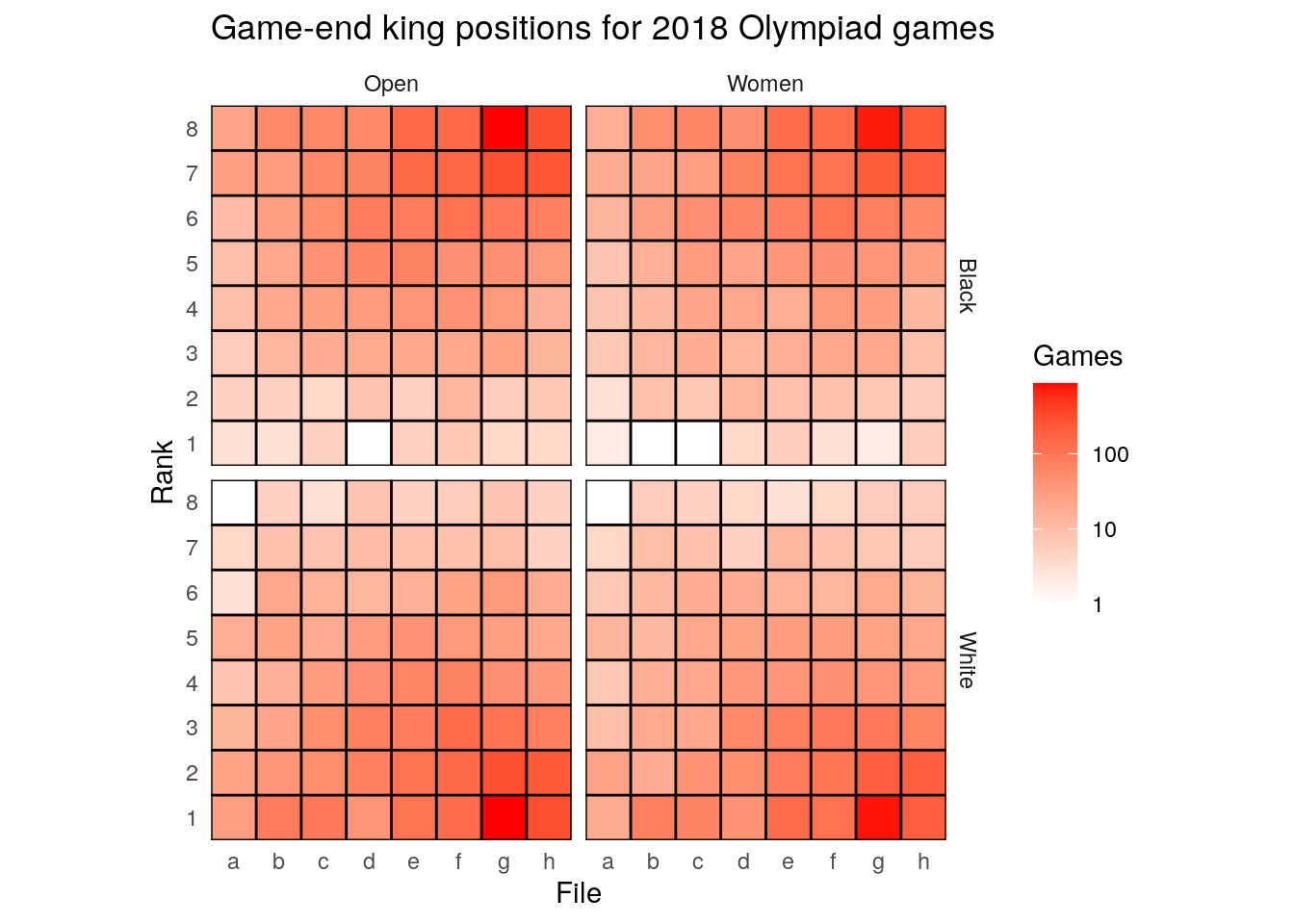

But I was interested in where kings are located at the end of the game.

I put a log transform on the colours here just because the kings so overwhelmingly ended up on that kingside castle position, in both divisions of the competition.

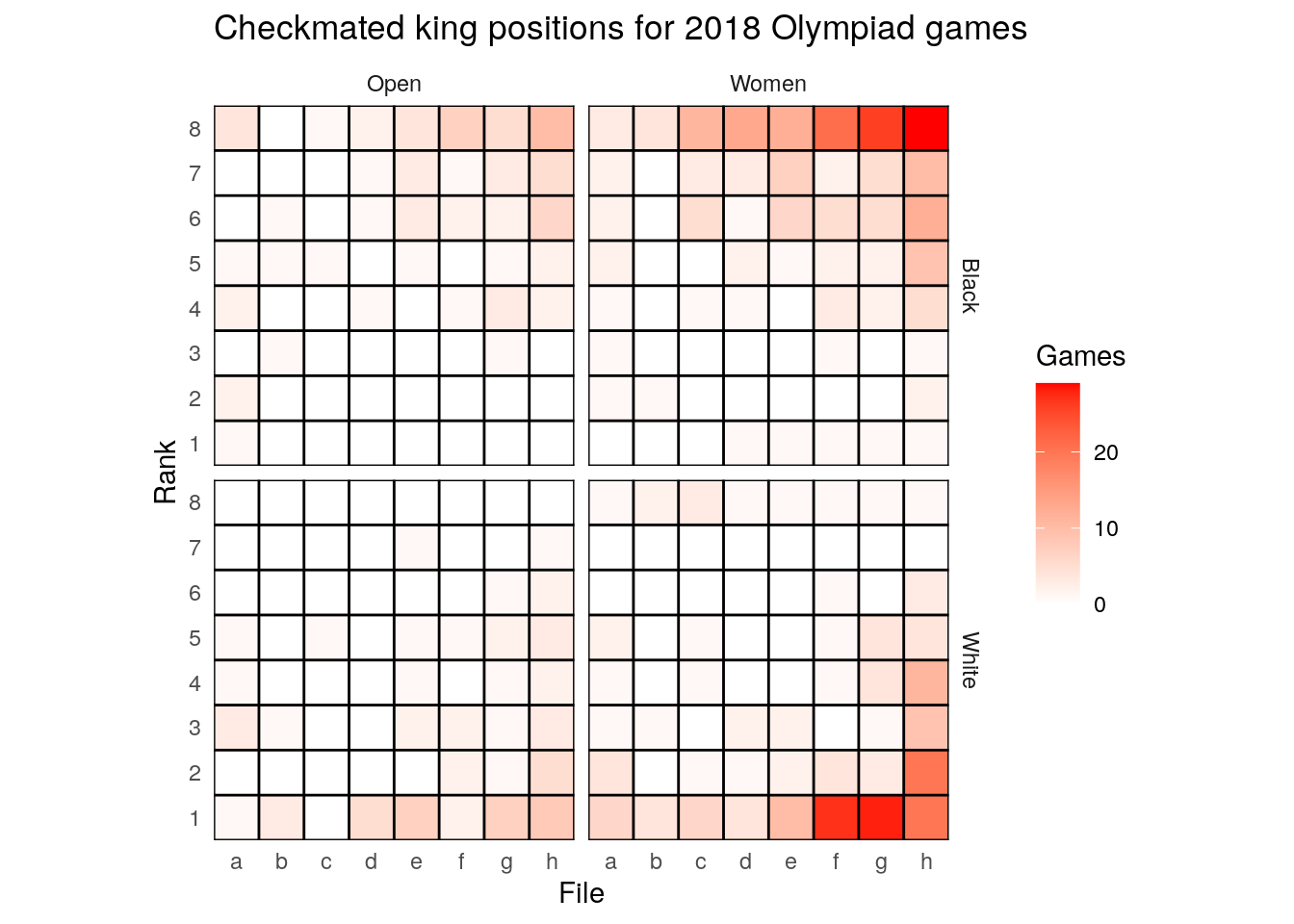

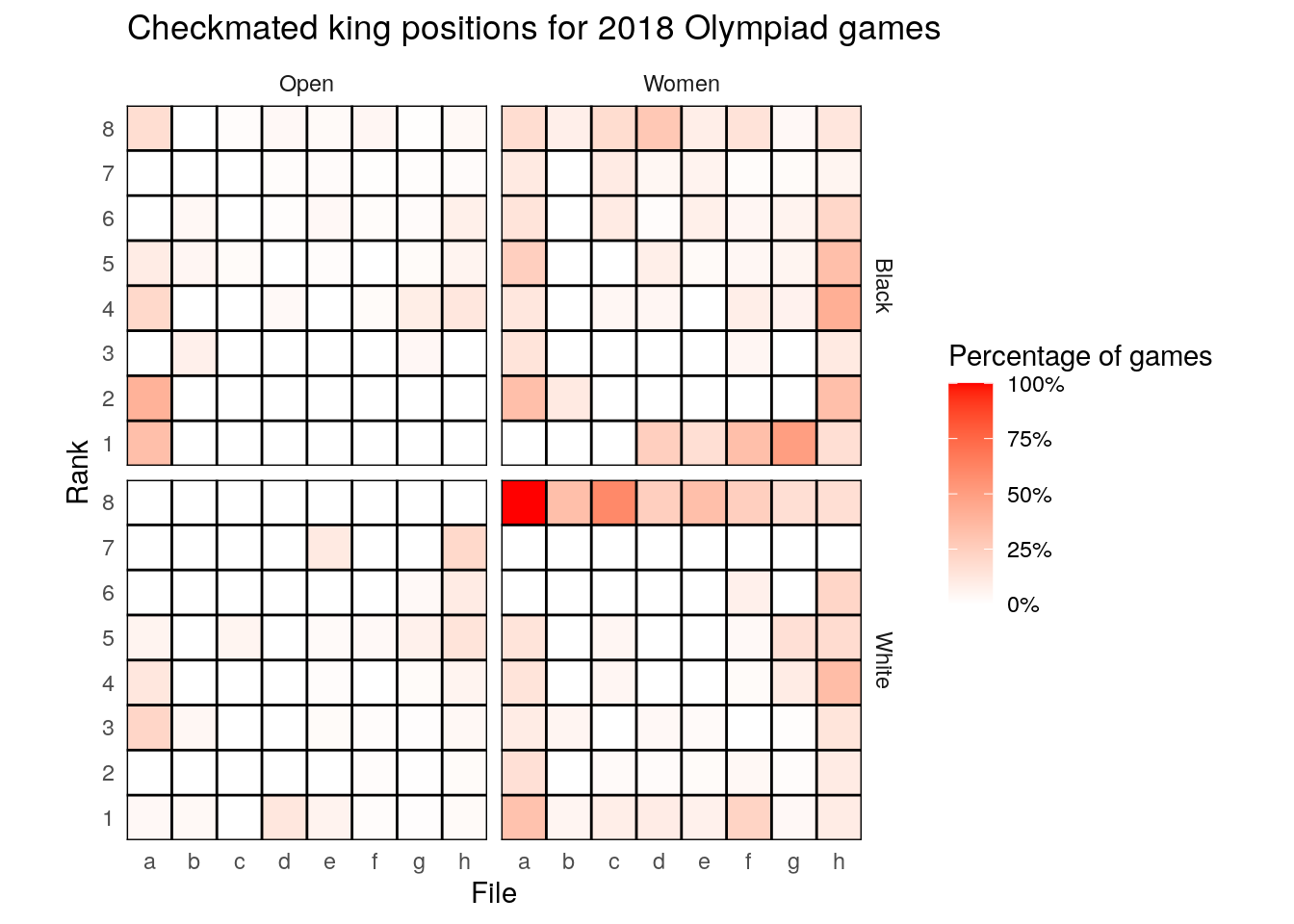

However, suppose I’m only interested in kings that have been checkmated specifically — where should I be if I want to get checkmated?

As already established, checkmates were much more common in the women’s division, but in both divisions they tended to happen on the edge of the board, in the near-kingside corner. Beware the back rank. Checkmates in the middle of the board are rare, but this is probably because most players aren’t foolish enough to move their king there before the endgame.

Of course, the back rank is where the king tends to be anyway. Combining the above two graphs to calculate the proportion of times a king finishing in a particular square has been checkmated we get:

There are still plenty of confounders I could look at, along with further increasing the number of games analysed, but the takeaway here is simple: if you want to lose, letting your king be forced all the way to the far rank is a good way to do it.
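The proportion described above amounts to dividing two square-by-square tallies. A minimal sketch, assuming the counts come from a per-square tally of final king positions like the one behind the heatmaps; `mate_proportion` and its `min_count` cut-off are hypothetical, the latter there because a square visited by only one or two kings gives a very noisy proportion.

```python
from collections import Counter


def mate_proportion(end_counts, mated_counts, min_count=1):
    """Share of kings finishing on each square that were checkmated there.

    end_counts:   Counter of final king squares over all games
    mated_counts: Counter of final king squares in games ending in checkmate
    Squares seen fewer than min_count times are skipped as too noisy.
    """
    return {
        square: mated_counts.get(square, 0) / n
        for square, n in end_counts.items()
        if n > 0 and n >= min_count
    }


# Toy counts for illustration only, not the Olympiad data.
ends = Counter({"g1": 10, "h1": 4, "h2": 2})
mates = Counter({"h1": 2, "h2": 2})
print(mate_proportion(ends, mates))               # {'g1': 0.0, 'h1': 0.5, 'h2': 1.0}
print(mate_proportion(ends, mates, min_count=3))  # {'g1': 0.0, 'h1': 0.5}
```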

I’ll probably come back to this at a later date. I wonder: is it possible to teach a computer what a winning position looks like, and could it work out how to create one? How many high-level chess players have blundered into scholar’s mate? There will always be more questions.